What is the RoboCar 1/10 series?

"Since its launch in 2009, the series has been used by automobile and parts manufacturers, universities, and other research and educational institutions for purposes ranging from autonomous-driving research and development to AI talent development, with an estimated 600+ units sold. The 1/10-scale vehicle carries a monocular camera and a laser range sensor, and its acceleration/gyro sensors and encoders let you track the vehicle's motion and distance traveled. Libraries are provided for sensor data acquisition, speed and steering-angle control, communication, and more, so customers can freely develop their own applications with them."

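RoboCar 1/10 Pro: a robot car for autonomous-driving and AI technology development

RoboCar 1/10 Pro autonomous-driving development platform

The latest model in our autonomous driving and ADAS development tool lineup!

Equipped with an NVIDIA Jetson AGX Orin, ROS2-ready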

Overview and features

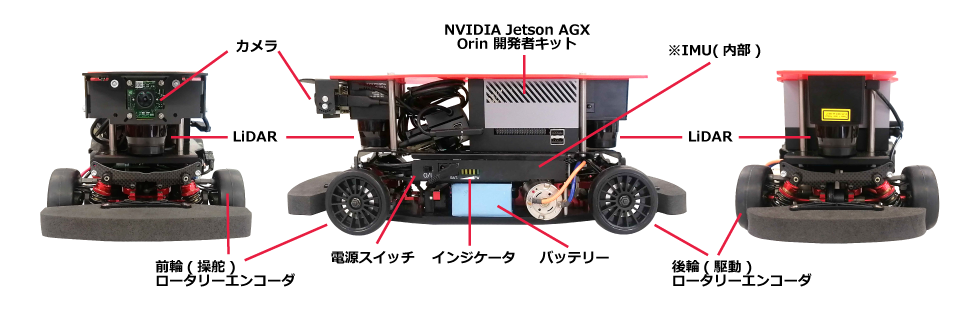

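The RoboCar 1/10 Pro, our latest model, uses the GPU-equipped NVIDIA Jetson AGX Orin Developer Kit, so advanced AI algorithms can be implemented on board. ROS2 (Robot Operating System 2) and the other operating systems and libraries needed for autonomous-driving and AI applications come preinstalled, so you can start development on the day you unpack it.

Key features:

・1/10 scale of a real car, so experiments can be run indoors

・The NVIDIA processor makes autonomous-driving development with image recognition and AI training easy

・Additional I/O for mounting extra sensors, and wireless communication for developing cooperative functions

It is a development platform that lets you start autonomous-driving development and experiments from day one.

It also supports ROS2 (Robot Operating System 2), an open-source software platform for robots that is also used in autonomous-driving R&D, giving you access to a wide range of software developed around the world.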

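RoboCar 1/10 Pro promotional video

System configuration

Onboard sensors

The vehicle carries a front camera and LiDAR units at the front and rear, and its acceleration/gyro sensors and encoders let you track the vehicle's motion and distance traveled.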
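The sentence above says the gyro and encoders are used to track the vehicle's motion and distance traveled. The following is a minimal dead-reckoning sketch in Python showing one common way to combine them: encoder ticks give the distance driven, and the gyro's yaw rate gives the heading change. The tick count per revolution, the wheel radius, and the function names are hypothetical placeholders. They are not RoboCar 1/10 Pro parameters or the API of the bundled libraries.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Planar pose: position in metres, heading in radians."""
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def ticks_to_distance(ticks: int, ticks_per_rev: int, wheel_radius: float) -> float:
    """Convert an encoder tick delta into distance travelled by the wheel (m)."""
    return 2.0 * math.pi * wheel_radius * ticks / ticks_per_rev


def integrate(pose: Pose, distance: float, yaw_rate: float, dt: float) -> Pose:
    """Advance the pose by one time step.

    distance: distance driven during the step (from the encoders, m)
    yaw_rate: rotation rate about the vertical axis (from the gyro, rad/s)
    Midpoint integration: move along the average heading of the step.
    """
    dtheta = yaw_rate * dt
    mid = pose.theta + 0.5 * dtheta
    return Pose(
        x=pose.x + distance * math.cos(mid),
        y=pose.y + distance * math.sin(mid),
        theta=pose.theta + dtheta,
    )


if __name__ == "__main__":
    # Hypothetical values: 1000 ticks per revolution, 5 cm wheel radius.
    step = ticks_to_distance(1000, 1000, 0.05)
    print(integrate(Pose(), step, 0.0, 0.1))
```

Dead reckoning like this drifts over time, which is one reason the LiDAR-based SLAM samples are useful alongside it.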

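Libraries are also provided for sensor data acquisition, speed and steering-angle control, communication, and more, and customers can use them to develop their own applications freely.

In addition, significantly improved CPU performance enables control development based on image processing and real-time recognition using deep learning.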

About the onboard sensors

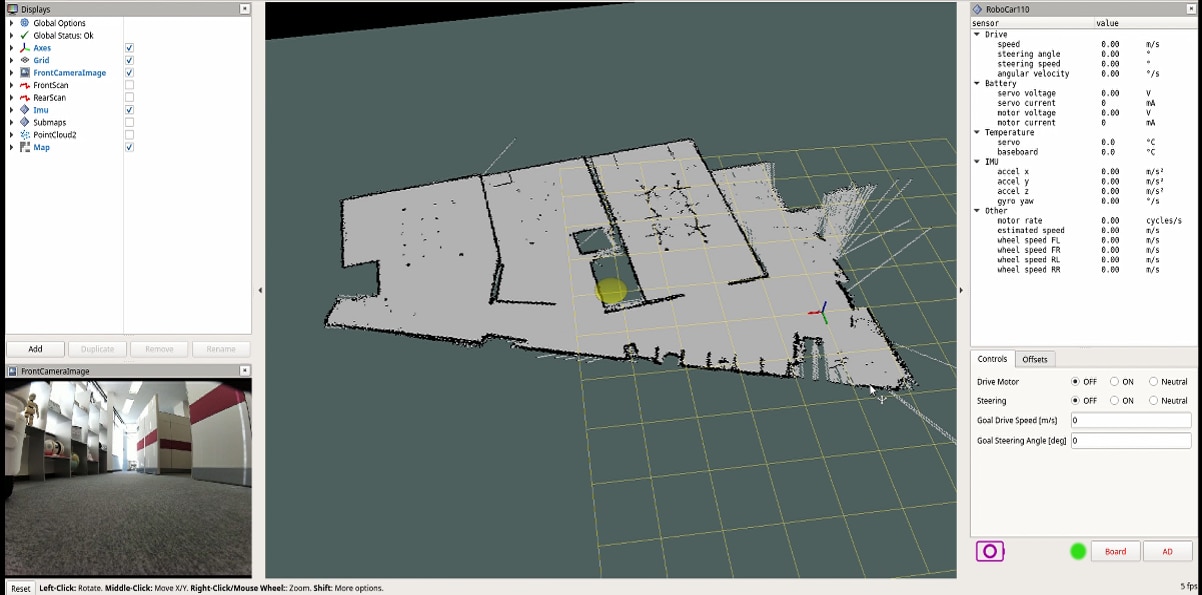

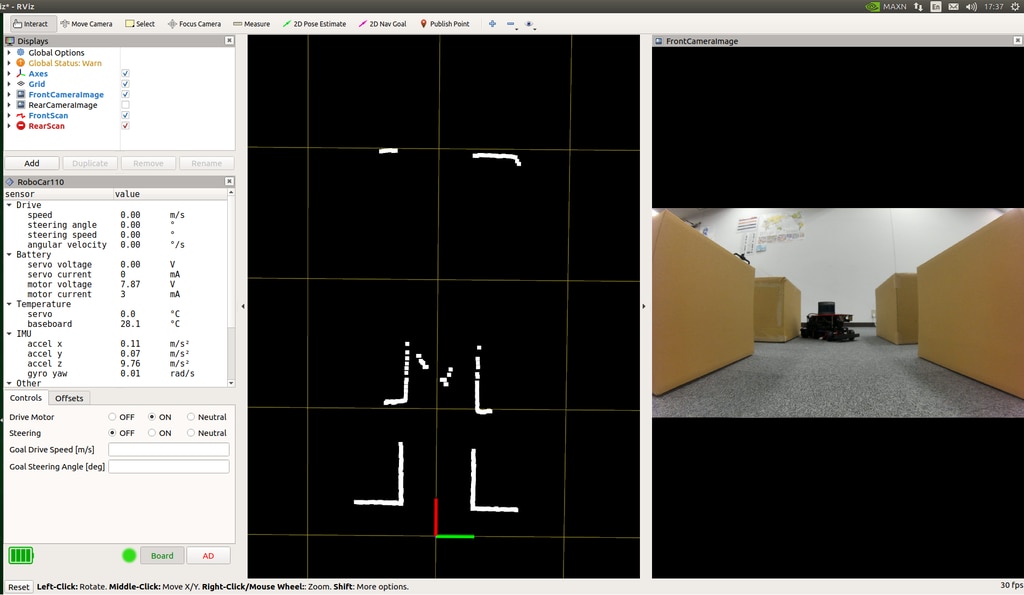

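ROS (Robot Operating System)

What is ROS?

ROS stands for Robot Operating System. Despite the name, it is not an operating system like Windows, Linux, or macOS. It is open-source software consisting of development tools and libraries.

It was developed by Willow Garage, a US robotics software company, and is now managed by the Open Source Robotics Foundation (OSRF), a nonprofit organization.

ROS is now publicly available worldwide. Anyone can build on the technology and know-how developed with it, and universities and research institutes use it actively. However, although ROS was considered well suited as a development platform for autonomous driving, it was said to lack many important features required in safety-critical environments such as vehicle control systems.

More recently, ROS2 has been released. It is designed to keep all of ROS's features (hardware abstraction, device drivers, libraries, visualization tools, message passing, and package management) while adding security, real-time control, network quality-of-service control, support for multiple robots operating together, and commercial support. Its use in autonomous-driving R&D is now being actively explored.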

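Available on GitHub

GitHub is a software development platform for hosting source code. Hosting code there lets multiple developers collaborate on it and review it together, and GitHub also offers social features such as feeds and followers.

We provide sample code free of charge through our account for open-source projects.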

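RoboCar 1/10 Pro | Extensive sample applications

SLAM sample applications

RoboCar 1/10X "SLAM package" introduction video

【Cartographer】

An open-source algorithm that provides real-time simultaneous localization and mapping (SLAM) in 2D or 3D across multiple platforms and sensor configurations. It is used as a key component of autonomous robots such as robot vacuum cleaners, automated forklifts, self-driving cars, and unmanned reconnaissance drones.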
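Cartographer itself builds submaps and aligns scans with an optimization-based scan matcher, which is beyond the scope of a short example. The following sketch shows only the generic idea underneath 2D grid mapping: a log-odds occupancy grid updated from laser beams traced with Bresenham's line algorithm, assuming the robot pose is already known. This is a swapped-in textbook technique, not Cartographer's code. The log-odds increments, the grid resolution, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Cell = Tuple[int, int]

# Hypothetical log-odds increments for a beam endpoint ("hit") and a pass-through.
L_OCC = 0.85
L_FREE = -0.4


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Grid cells on the line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def to_cell(x: float, y: float, res: float) -> Cell:
    """Map-frame coordinates (m) to integer grid indices."""
    return (math.floor(x / res), math.floor(y / res))


def update_grid(grid: Dict[Cell, float], pose: Tuple[float, float, float],
                ranges: Sequence[float], angles: Sequence[float],
                res: float, max_range: float) -> None:
    """Fold one scan into the grid in place. pose = (x, y, theta) in the map frame."""
    x, y, theta = pose
    origin = to_cell(x, y, res)
    for r, a in zip(ranges, angles):
        hit = r < max_range                      # a max-range beam hit nothing
        r = min(r, max_range)
        end = to_cell(x + r * math.cos(theta + a), y + r * math.sin(theta + a), res)
        ray = bresenham(*origin, *end)
        for cell in ray[:-1]:                    # cells the beam passed through
            grid[cell] = grid.get(cell, 0.0) + L_FREE
        last = ray[-1]
        grid[last] = grid.get(last, 0.0) + (L_OCC if hit else L_FREE)


def occupancy_probability(log_odds: float) -> float:
    """Convert accumulated log-odds back to a probability in (0, 1)."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Storing log-odds means that each new observation is a simple addition, and unobserved cells (log-odds 0) read as probability 0.5.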

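2D map sample

【Hector SLAM】

Open-source software for the ROS environment, developed by members of "Hector", a robotics research team at Technische Universität Darmstadt in Germany. The algorithm was designed for situations where building a map of an unknown environment is essential, such as robots deployed in urban search and rescue (USAR). It aims to estimate the full 6-DOF pose without relying on odometry.

※Not supported on ROS2.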
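Hector SLAM does not rely on wheel odometry. Instead, it registers each new laser scan against the map built so far. The original implementation does this with a Gauss-Newton optimization over a multi-resolution occupancy grid. The simpler sketch below swaps in a brute-force correlative search to illustrate the same scan-to-map idea: it tries poses around an initial guess and keeps the one that places the most scan points on occupied cells. The occupied-cell set, the step sizes, and the function names are hypothetical.

```python
import itertools
import math
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # x, y, theta


def transform(points: Iterable[Point], pose: Pose) -> List[Point]:
    """Move scan points from the sensor frame into the map frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]


def score(points: Iterable[Point], pose: Pose,
          occupied: Set[Tuple[int, int]], res: float) -> int:
    """Number of scan points that land on an occupied map cell."""
    return sum(
        (math.floor(wx / res), math.floor(wy / res)) in occupied
        for wx, wy in transform(points, pose)
    )


def match(points: List[Point], guess: Pose, occupied: Set[Tuple[int, int]],
          res: float, lin_step: float, ang_step: float, n: int) -> Tuple[Pose, int]:
    """Exhaustive search over a (2n+1)^3 window of poses around the guess."""
    best, best_score = guess, score(points, guess, occupied, res)
    steps = range(-n, n + 1)
    for i, j, k in itertools.product(steps, steps, steps):
        cand = (guess[0] + i * lin_step,
                guess[1] + j * lin_step,
                guess[2] + k * ang_step)
        s = score(points, cand, occupied, res)
        if s > best_score:
            best, best_score = cand, s
    return best, best_score
```

An exhaustive search is easy to follow but its cost grows with the cube of the window size. This is why practical matchers use gradient-based refinement or coarse-to-fine grids instead.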

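2D map sample

Object detection application

【SSD (Single Shot MultiBox Detector)】

A general object detection algorithm based on machine learning. Using deep learning, it can detect many kinds of objects at high speed. It can also be trained on specific objects and then used to detect those objects.

As the name "Single Shot" suggests, SSD performs both region proposal and class classification in a single CNN pass. This is what makes it a fast object detection algorithm.

The SSD network uses an image classification model as its first layers (the base network), followed by additional convolutional layers.

At prediction time, feature maps are taken from each of these layers and used for object detection. Specifically, a 3×3 convolutional filter is applied to each feature layer to predict class scores and box location offsets.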
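The per-layer prediction can be made concrete with a little arithmetic. Consider a feature map of m × n cells with k default boxes per cell and c classes. The 3×3 prediction filters output k·c class-score channels and k·4 box-offset channels, for k·(c + 4) channels in total. The sketch below uses the SSD300 configuration from the original SSD paper: six feature maps with sides of 38, 19, 10, 5, 3, and 1 cells, 4 or 6 default boxes per cell, and 21 classes for PASCAL VOC including background. The RoboCar sample application may use a different base network and layer set.

```python
from typing import List, Tuple

# SSD300 layout from the original paper:
# (feature map side length, default boxes per cell) for each prediction layer.
SSD300_LAYERS: List[Tuple[int, int]] = [(38, 4), (19, 6), (10, 6), (5, 6), (3, 4), (1, 4)]


def head_channels(boxes_per_cell: int, num_classes: int) -> Tuple[int, int]:
    """Output channels of the 3x3 class head and the 3x3 location head for one layer."""
    return boxes_per_cell * num_classes, boxes_per_cell * 4


def total_default_boxes(layers: List[Tuple[int, int]]) -> int:
    """Every cell of every feature map predicts k boxes in the same forward pass."""
    return sum(size * size * k for size, k in layers)


if __name__ == "__main__":
    for size, k in SSD300_LAYERS:
        cls_ch, loc_ch = head_channels(k, num_classes=21)
        print(f"{size}x{size} map: class head {cls_ch} ch, location head {loc_ch} ch")
    print("default boxes per image:", total_default_boxes(SSD300_LAYERS))
```

All of these predictions come out of one forward pass through the network, which is the source of the "Single Shot" speed.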

Object detection app introduction video

Object detection result samples

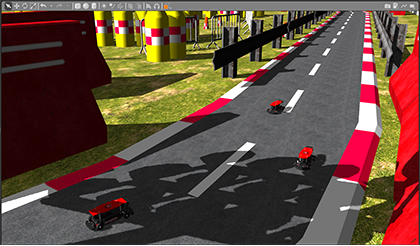

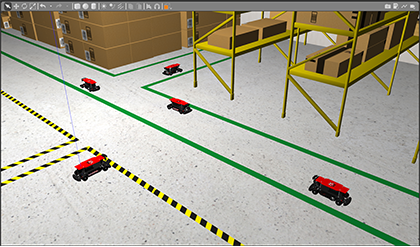

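Simulator application

We have integrated the ROS-based Gazebo simulator and built a model of the RoboCar 1/10 Pro for it.

With this sample app, you can check the vehicle's driving behavior and sensor data in a virtual space.

Gazebo is a virtual simulation tool for robots running on ROS. It can use multiple high-performance physics engines such as ODE and Bullet. It also provides realistic rendering of environments, with high-quality lighting, shadows, and textures, and it can model sensors in the simulated environment such as laser rangefinders, cameras, and Kinect-style sensors.

There are two main ways to use Gazebo:

Ⅰ. Install an existing robot simulation model and verify its behavior under modified simulation conditions.

Ⅱ. Define everything yourself, starting with building the robot model, and then run the simulation.

For approach Ⅰ, a RoboCar 1/10 Pro model has already been built, so you can use it right away.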

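RoboCar 1/10 Pro simulator app video

Gazebo app video

Navigation application

The Navigation tool uses the map created with SLAM to generate a route, enabling the RoboCar 1/10 Pro to drive autonomously.

When you enter a goal point on the sample application screen, a driving route is generated automatically. Route generation uses a "sampling-based motion planning" method.

In this approach, represented by RRT (Rapidly-exploring Random Tree) and PRM (Probabilistic RoadMap), the configuration space is sampled randomly to build a network of collision-free paths called a roadmap. A graph search over this network then produces a path from the initial position to the goal.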
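To make the sampling-based approach concrete, the following is a minimal 2D RRT sketch in Python. It samples random points, extends the tree from the nearest node by a fixed step, and rejects any extension that would hit an obstacle. It assumes a point robot among circular obstacles and ignores the car's steering limits. A real Ackermann-steered vehicle needs a kinematically feasible planner. The function names and parameters are hypothetical and are not the API of the bundled Navigation tool.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # center x, center y, radius


def segment_free(p: Point, q: Point, obstacles: Sequence[Circle], step: float = 0.05) -> bool:
    """Check a straight segment by testing points along it every `step` metres."""
    n = max(1, math.ceil(math.dist(p, q) / step))
    for i in range(n + 1):
        t = i / n
        x, y = p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])
        if any((x - ox) ** 2 + (y - oy) ** 2 <= r * r for ox, oy, r in obstacles):
            return False
    return True


def rrt(start: Point, goal: Point, obstacles: Sequence[Circle],
        bounds: Tuple[Tuple[float, float], Tuple[float, float]],
        step: float = 0.3, goal_tol: float = 0.3, goal_bias: float = 0.1,
        max_iter: int = 5000, seed: int = 0) -> Optional[List[Point]]:
    """Grow a tree from start until a node can connect to goal; return the path or None."""
    rng = random.Random(seed)
    nodes: List[Point] = [start]
    parent: List[int] = [-1]                     # parent index of each node
    for _ in range(max_iter):
        # Occasionally sample the goal itself to pull the tree toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds[0]), rng.uniform(*bounds[1]))
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        s = min(step, d)                         # extend by at most one step
        new = (near[0] + s * (sample[0] - near[0]) / d,
               near[1] + s * (sample[1] - near[1]) / d)
        if not segment_free(near, new, obstacles):
            continue
        nodes.append(new)
        parent.append(near_i)
        if math.dist(new, goal) <= goal_tol and segment_free(new, goal, obstacles):
            # Walk back up the tree to recover the path, then reverse it.
            path = [goal]
            i = len(nodes) - 1
            while i != -1:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

The resulting path is typically jagged. In practice, it is usually smoothed or shortcut before a path-following controller drives along it.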

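This approach has advanced rapidly over the past decade, and online replanning systems have also been developed: when a collision with an obstacle is predicted, the planner immediately replans and produces a collision-free path.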
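The trigger for online replanning can be sketched as a check of the current path against newly observed obstacles. If any segment of the path passes within a safety margin of an obstacle, a new path is requested from the planner. The minimal Python sketch below assumes circular obstacles and a fixed margin. The names are hypothetical, and a real system would also account for the vehicle's footprint and for moving obstacles.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # center x, center y, radius


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment ab."""
    ax, ay = a
    bx, by = b
    vx, vy = bx - ax, by - ay
    length_sq = vx * vx + vy * vy
    if length_sq == 0.0:
        return math.dist(p, a)
    # Project p onto the line through a and b, clamped to the segment.
    t = max(0.0, min(1.0, ((p[0] - ax) * vx + (p[1] - ay) * vy) / length_sq))
    return math.dist(p, (ax + t * vx, ay + t * vy))


def first_blocked_segment(path: Sequence[Point], obstacles: Sequence[Circle],
                          margin: float) -> Optional[int]:
    """Index i of the first segment path[i] -> path[i+1] that comes within
    `margin` of an obstacle, or None if the whole path is still clear."""
    for i in range(len(path) - 1):
        for ox, oy, r in obstacles:
            if point_segment_distance((ox, oy), path[i], path[i + 1]) <= r + margin:
                return i
    return None


def needs_replan(path: Sequence[Point], obstacles: Sequence[Circle],
                 margin: float = 0.2) -> bool:
    """True if the current path must be replaced."""
    return first_blocked_segment(path, obstacles, margin) is not None
```

Returning the index of the blocked segment lets a planner keep the clear prefix of the path and replan only from the point just before the conflict.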

The RoboCar 1/10 Pro can then drive autonomously along the generated route.

※Not supported on ROS2.

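Navigation app introduction video

Expanded simulation environments

We have expanded the range of environments available in the Gazebo simulator.

Example simulator worlds: circuit and factory

Five new environments are now available: factory, hospital, house interior, circuit, and bookstore.

Beyond autonomous driving, developing in simulation is becoming mainstream when introducing AMRs and AGVs as well. Because a simulator can confirm whether each robot behaves as intended, simulation is attracting attention as a way to greatly shorten robot and autonomous-driving development.

Running proof-of-concept trials in a simulator before deploying robots is also expected to shorten those trials considerably.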

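Multi-car control system

The multi-car control system enables remote monitoring and operation of multiple RoboCar 1/10 Pro vehicles.

Driverless autonomous vehicles, AMRs (Autonomous Mobile Robots), and other robots are sometimes required to work together with remote monitoring and operation. In systems built around remote monitoring and operation, the number of vehicles or robots that one operator can manage has a major impact on operating profitability, so the higher-level robot control system is important. Running such R&D experiments with real vehicles and robots is heavily constrained by space and cost. Development is also done in simulation environments, but simulations are limited in how well they can reproduce the variety of events that occur in the real world.

Multiple RoboCar 1/10 Pro vehicles can be operated remotely from a single PC. Operating modes range from remote control with a game controller to route generation and route following with the Navigation tool provided as a sample app, and both can run on several vehicles at the same time. This makes it easy to carry out R&D and experiments on multi-vehicle control with real vehicles, which had previously been a bottleneck.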
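A central part of supervising several vehicles from one PC is keeping track of each vehicle's state and flagging any vehicle that stops reporting. The minimal sketch below models only that supervisory bookkeeping: each car sends periodic heartbeats, the operator assigns a navigation goal per car, and cars that stay silent longer than a timeout are listed as needing attention. The class, the method names, and the timeout are hypothetical. The product's actual multi-car control software and its communication layer are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Goal = Tuple[float, float]


@dataclass
class CarState:
    last_heartbeat: float            # time of the last status message (s)
    goal: Optional[Goal] = None      # current navigation goal, if any


class FleetMonitor:
    """Hypothetical bookkeeping for one operator supervising several cars."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.cars: Dict[str, CarState] = {}

    def heartbeat(self, car_id: str, now: float) -> None:
        """Record a status message; registers the car on first contact."""
        state = self.cars.get(car_id)
        if state is None:
            self.cars[car_id] = CarState(last_heartbeat=now)
        else:
            state.last_heartbeat = now

    def assign_goal(self, car_id: str, goal: Goal) -> None:
        """Set the navigation goal for a car that has already reported in."""
        if car_id not in self.cars:
            raise KeyError(f"unknown car: {car_id}")
        self.cars[car_id].goal = goal

    def stale_cars(self, now: float) -> List[str]:
        """IDs of cars that have been silent for longer than the timeout."""
        return sorted(cid for cid, s in self.cars.items()
                      if now - s.last_heartbeat > self.timeout_s)
```

Passing the current time in explicitly, instead of reading a clock inside the class, keeps the logic deterministic and easy to test.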

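Multi-car control app introduction video

【Other samples】

・Steering and drive motor control

・Acquisition of sensor values and camera images

・Obstacle avoidance using the laser range sensor

・Data communication over Wi-Fi

・Remote operation, etc.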
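As an illustration of obstacle avoidance with a laser range sensor, the minimal reactive sketch below turns one scan into a speed and steering command. It stops if something is close straight ahead, slows down as the nearest obstacle in front gets closer, and steers away from whichever side is closer to an obstacle. The sketch assumes that angles are measured counter-clockwise from the vehicle's forward axis (positive = left). It uses the 20 to 5,600 mm detection range from the specification table to discard invalid readings. All thresholds and names are hypothetical, and this is not the bundled sample program.

```python
import math
from typing import Sequence, Tuple

# Valid detection range of the laser range sensor, from the specification table (m).
RANGE_MIN_M, RANGE_MAX_M = 0.02, 5.6


def avoid(ranges: Sequence[float], angle_min: float, angle_increment: float,
          max_speed: float = 1.0, stop_dist: float = 0.4, slow_dist: float = 1.5,
          max_steer: float = math.radians(30),
          front_half_width: float = math.radians(15)) -> Tuple[float, float]:
    """Return (speed in m/s, steering angle in rad, positive = left)."""
    front, left, right = [], [], []
    for i, r in enumerate(ranges):
        if not (RANGE_MIN_M <= r <= RANGE_MAX_M):
            continue                          # drop out-of-range or invalid readings
        a = angle_min + i * angle_increment
        if abs(a) <= front_half_width:
            front.append(r)
        elif a > 0:
            left.append(r)
        else:
            right.append(r)

    nearest_front = min(front, default=math.inf)
    if nearest_front <= stop_dist:
        return 0.0, 0.0                       # obstacle directly ahead: stop

    # Scale speed linearly between stop_dist and slow_dist.
    speed = max_speed * min(1.0, (nearest_front - stop_dist) / (slow_dist - stop_dist))

    # Steer toward the side whose nearest obstacle is farther away.
    clear_left = min(min(left, default=math.inf), slow_dist)
    clear_right = min(min(right, default=math.inf), slow_dist)
    steer = max_steer * (clear_left - clear_right) / slow_dist
    return speed, steer
```

A purely reactive rule like this is easy to tune but can oscillate or get stuck in corners. This is one reason the Navigation sample plans a route over a map instead.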

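Sample app image

Product specifications

| Category | Item | Specification |
|---|---|---|
| Body | Size / weight | 190 × 429 × 153 [mm] / 3.0 [kg] |
| | Maximum payload | 1 [kg] |
| | Minimum turning radius | Approx. 500 [mm] |
| | Top speed | Approx. 10 [km/h] |
| | Chassis / frame | Aluminum chassis, double-wishbone suspension, ZMP aluminum frame |
| | Motors | Drive: compact DC motor / Steering: robot servo motor |
| | Batteries | Control-unit battery (optional): dedicated Li-ion battery pack (×1); drive-unit battery: NiMH battery pack (7.2 [V] ×1) |
| Onboard sensors | Camera | Monocular USB camera ×1 (front): 1920 × 1080 [RAW], 60 [fps], 139 [deg], CMOS image sensor |
| | Laser range sensors | ×2 (front and rear): detection range 20 to 5,600 [mm], 240 [deg] |
| | Inertial sensors | Gyro (1-axis), accelerometer (3-axis) |
| | Rotary encoders | Wheels ×4, motor ×1, steering ×1 |
| Onboard computer | Processor | NVIDIA Jetson AGX Orin (12-core Arm v8.2 64-bit CPU) |
| | GPU / memory / storage | 2048-core NVIDIA Ampere architecture GPU with 64 Tensor Cores; RAM: 32 [GB]; SSD: 64 [GB] |
| Wi-Fi | | IEEE 802.11b/g/n/ac, WEP/WPA, 2.4 [GHz] / 5 [GHz] |
| Onboard software | OS | Linux (Ubuntu 20.04) |
| | Supported libraries | ROS, ROS2, CUDA, cuDNN, TensorFlow, PyTorch, OpenCV, PCL |
| | Sample programs | Vehicle control, sensor data acquisition, LAN communication, obstacle avoidance with LRF, remote operation, SLAM (Hector, Cartographer) |
| Accessories | | Joystick controller, chargers for the control and drive batteries |

※Product specifications are subject to change without notice.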

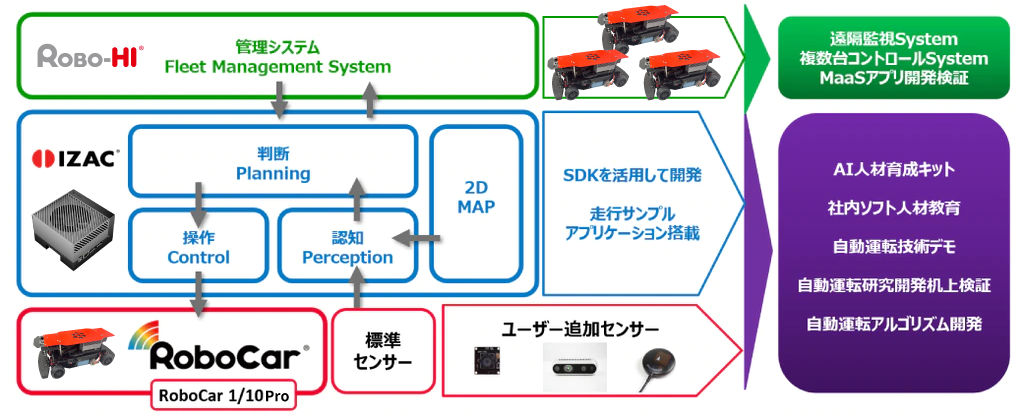

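Expandability and system integration examples

Using the RoboCar 1/10 Pro as a hardware platform, you can develop control systems with the standard sensors or with additional sensors.

Its communication capabilities can also be used to develop multi-vehicle control, vehicle-to-infrastructure systems that link the vehicle with its surroundings (such as traffic signals and external devices), and remote monitoring systems.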

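RoboCar 1/10 Pro system configuration and use cases

RoboCar 1/10 series deployment examples

Since sales began in 2009, more than 300 RoboCar 1/10 series units have been adopted by educational institutions.

See the link below for details of our deployment record.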

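Price

【Sales price】

Standard price: 1,800,000 yen (excl. tax) / Academic price: 1,620,000 yen (excl. tax), including the software development kit (SDK)

【Rental price】

150,000 yen (excl. tax) per month ※Rentals are available for periods from one month.

Product catalog download

RoboCar 1/10 Pro support

This page provides manuals, software updates, and options for the RoboCar 1/10 Pro.

※An ID and password are required to access it.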

Product inquiries

Use the form below for pre-purchase inquiries, product demo requests, and more.

© ZMP INC. All Rights Reserved.