
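About Stereo Cameras (Stereo Vision)

In ADAS (advanced driver assistance systems), a stereo camera (stereo vision) is a device that measures the distance to vehicles ahead and captures images. Its output is used for driver assistance functions such as automatic braking and lane marking recognition.

In recent years stereo cameras have been installed in mass-production vehicles, where they serve as sensors that directly control the vehicle. This page explains the role, structure, principle, calculation algorithms, and image processing software (OpenCV) of stereo cameras. It also introduces ZMP's stereo camera unit products that can be used for research and development.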

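1. What Is a Stereo Camera?

A stereo camera uses two cameras (a twin-lens arrangement) to capture an object simultaneously from different directions, in the same way that human eyes see. From the pixel positions of the object in the two images, it can also measure information in the depth direction.

Stereo cameras are also referred to as stereo vision, stereo camera systems, stereo camera units, or stereo digital cameras.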

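Fig. 1 Stereo camera RoboVision2s

2. Features of Stereo Cameras

A stereo camera is a type of distance sensor, alongside infrared sensors and millimeter-wave radar. It is a stereo digital camera that applies the principle of triangulation, the same principle by which humans perceive depth.

Like the human eye, a stereo camera therefore uses images captured by two cameras to perceive a scene in three dimensions, including depth.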

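Its main features are as follows.

1. A stereo camera can detect objects over a wide range of distances

→ As long as the matching algorithm can find the object in both images, the distance to it can be measured, and the image information can also be used for recognition processing.

2. A stereo camera has high distance accuracy for nearby objects

→ As the depth calculation described later shows, measurement accuracy is high for objects close to the camera.

3. Three-dimensional measurement gives the absolute distance from the camera

→ Because distance is measured with two cameras, the result is an absolute distance, that is, how many meters the object is from the camera.

4. The detection range can be extended by combining multiple cameras

→ Multiple cameras can measure distance and recognize images all around a moving platform, reducing its blind spots.

5. A stereo camera is robust to environmental changes such as night, snow, and rain

→ Image processing provides stable ranging results even when driving in rain, snow, or at night.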

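3. Applications of Stereo Cameras

In the automotive field, stereo cameras are mounted on vehicles as on-board cameras and used as sensors for advanced driver assistance systems (ADAS), a typical example being automatic collision avoidance. In other industries, they are used as sensors that recognize people and obstacles around construction machinery, and as stationary sensors for tasks such as road surface inspection.

They are also used for environment recognition around industrial robots, for flow-line analysis of visitors at events and in stores (overlapping people can still be counted separately), and for security and surveillance.

More broadly, stereo cameras are built into products from a wide range of Japanese manufacturers as a substitute for visual object recognition by the human eye.

ZMP's autonomous driving development vehicle RoboCar is also equipped with obstacle recognition and preceding-vehicle following based on stereo images, and ZMP uses it for research and development of sensor fusion technology.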

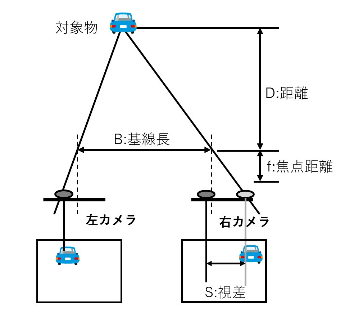

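4. Principle of Stereo Cameras

To measure with a stereo camera, the distance to each detected object must be calculated.

This section describes how a stereo camera measures distance.

As shown in the figure, a stereo camera calculates the distance to an object using the principle of triangulation.

This requires the disparity, that is, the difference between the positions at which the same object is imaged by the two cameras.

To obtain the disparity, the pixels that image the same object must be identified in both cameras' images. This process is called stereo matching.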

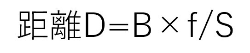

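4-1. What Is Stereo Matching?

Stereo matching is a method of estimating disparity by matching corresponding regions of the two images.

Disparity is the difference in position of corresponding regions between the two images. Once stereo matching has estimated the disparity of each region of the image, the distance can be calculated using the principle of triangulation.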

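4-2. What Is Triangulation?

Triangulation is a method of measuring the distance to a remote point using the properties of triangles.

Specifically, it is a surveying method based on trigonometry and geometry in which the position of a target point is determined by measuring the angle to it from each of two known points at the ends of a baseline. When the exact distance between the two points is known, the distance to a remote target follows from the angles at those two points, because a triangle is fully determined by one side and the two angles adjacent to it.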

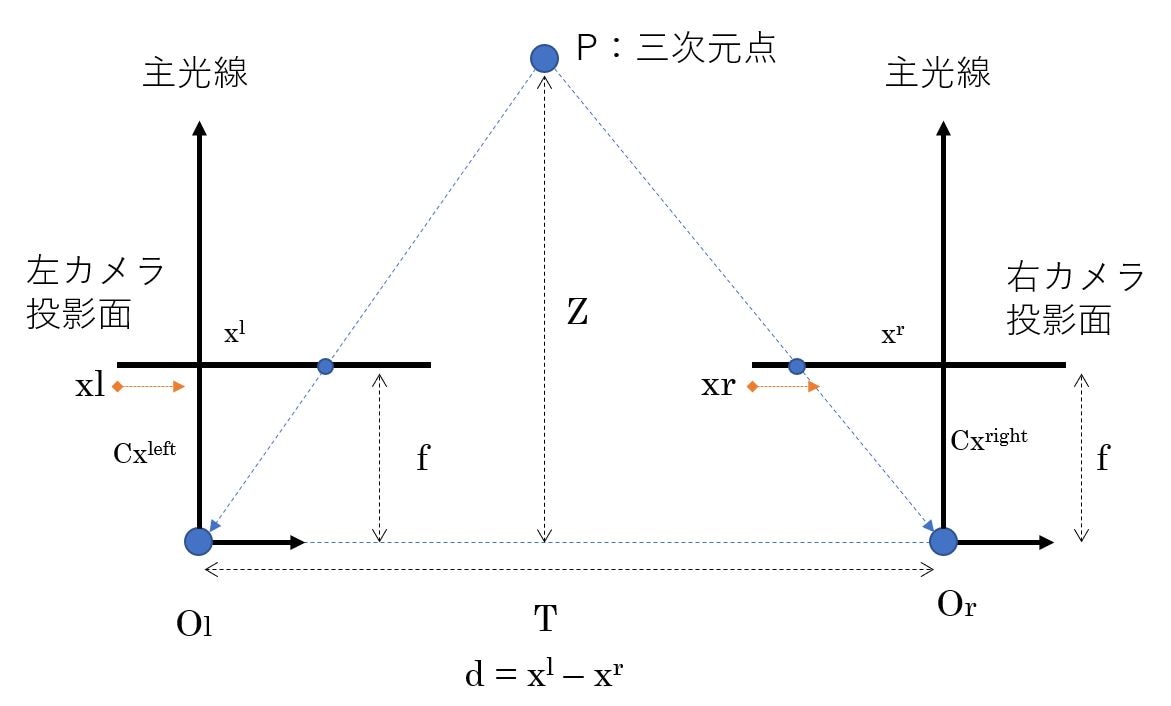

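Fig. 2 Illustration of the triangulation calculation

In a stereo camera, the baseline length B, the focal length f, and the difference in imaging position (the disparity d) are all known, so the distance Z to the object can be calculated as Z = f·B / d.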
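The angle-based triangulation described in section 4-2 can be sketched in a few lines. This is only an illustration of the "one side and two adjacent angles" property; the function name and argument layout are assumptions made for this example and do not come from any ZMP software.

```python
import math


def triangulate_distance(baseline, angle_a, angle_b):
    """Perpendicular distance from the baseline to the target point.

    baseline: known distance between the two observation points.
    angle_a, angle_b: interior angles (radians) between the baseline and
    the line of sight to the target, measured at each end of the baseline.
    """
    angle_c = math.pi - angle_a - angle_b  # angle at the target
    if angle_c <= 0:
        raise ValueError("the two lines of sight do not meet in front of the baseline")
    # Law of sines: the side from point A to the target is opposite angle_b.
    side_a_to_target = baseline * math.sin(angle_b) / math.sin(angle_c)
    # Project that side onto the direction perpendicular to the baseline.
    return side_a_to_target * math.sin(angle_a)
```

For example, with a baseline of 2 m and both angles equal to 45 degrees, the target lies 1 m in front of the midpoint of the baseline.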

5. ステレオカメラの距離計測

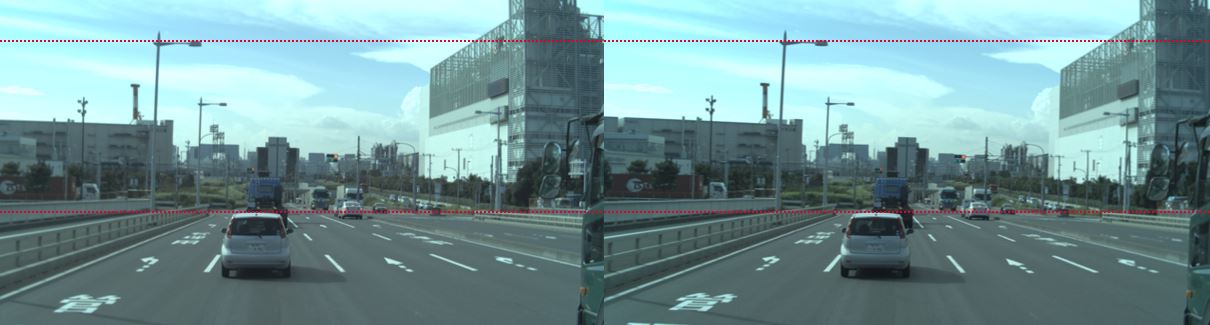

ステレオカメラの距離計測の手法の一つに左右の画像間で、ずれ量を計測するための方法の一つにブロックマッチングと呼ばれる方法についてここでは紹介していきます。

これは、一方の画像(左画像) のある点に注目し、その周囲数ピクセルの矩形をブロックとし、もう一方の画像からそのブロックともっとも相関のある位置を探しだす方法です。

ここで左右の画像が、ゆがみなく、上下方向のずれや、光軸のずれなどがなく、真に左右のみの平行移動分のずれであるとすると、同一の対象物は画像中でも同じY 座標に表れるはずなので、ブロックの相関を検索する対象は、同じY においてX 方向にのみずらしていけばよくなり、この検査で最も相関の高かった位置(ずれ)を視差値とする計算方法となります。

Fig. 3 Illustration of block matching
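As a minimal sketch of the block matching search described above, the following pure-Python example slides a small block along one image row and keeps the shift with the lowest sum of absolute differences (used here as the correlation measure). The function names, the tiny test images, and the default block size are all assumptions for illustration; a real system would use an optimized library implementation.

```python
def sad(left, right, x_left, x_right, y, half):
    """Sum of absolute differences between two (2*half+1)-pixel square blocks."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x_left + dx] - right[y + dy][x_right + dx])
    return total


def match_disparity(left, right, x, y, half=1, max_disparity=4):
    """Disparity of left-image pixel (x, y), searching along the same row."""
    best_d, best_cost = 0, None
    for d in range(max_disparity + 1):
        x_right = x - d  # in rectified images the match lies to the left
        if x_right - half < 0:
            break  # block would leave the image
        cost = sad(left, right, x, x_right, y, half)
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Hypothetical 3x8 grayscale images: the pattern 9, 5, 7 appears
# two pixels further right in the left image than in the right image.
right_row = [0, 0, 9, 5, 7, 0, 0, 0]
left_row = [0, 0, 0, 0, 9, 5, 7, 0]
right = [right_row[:] for _ in range(3)]
left = [left_row[:] for _ in range(3)]

print(match_disparity(left, right, 5, 1))  # prints 2
```

The search is one-dimensional only because the images are assumed to be rectified, as the preceding paragraph explains.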

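5-1. Calculation Algorithms

Distance measurement with a stereo camera is processed in several stages. Each stage is briefly introduced below.

1. Preprocessing: distortion correction (calibration), normalization of image brightness, and so on

2. Rectification: image transformation to make matching efficient

3. Matching: estimating disparity by matching

4. Triangulation: converting the disparity map to distance using the geometric arrangement of the cameras

Disparity is measured and distance is calculated through these steps.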

5-1-1 歪み補正(キャリブレーション)について

画像処理の前処理として、カメラの歪みを補正します。カメラのレンズは歪みを持っているため曲がっているため、数学的にレンズの半径方向の歪みと円周方向の歪みを取り除きます。

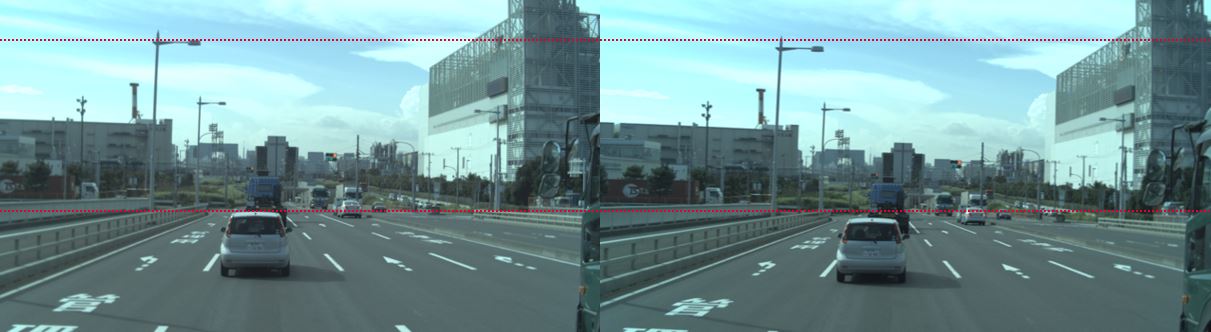

図 歪み補正前画像(左)と歪み補正後画像(右)
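To make "removing radial and tangential distortion mathematically" concrete, here is a minimal sketch using the widely used polynomial distortion model with radial coefficients k1, k2 and tangential coefficients p1, p2 (the same form used by OpenCV's camera model). The coefficient values in the usage note are invented for illustration, and the iterative inversion is one common approach, not necessarily the one used in any particular product.

```python
def distort(x, y, k1, k2, p1, p2):
    """Apply radial and tangential distortion to normalized image coordinates."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 * r2
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return x_d, y_d


def undistort(x_d, y_d, k1, k2, p1, p2, iterations=20):
    """Invert the model by fixed-point iteration (assumes mild distortion)."""
    x, y = x_d, y_d  # start from the distorted point
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2 * r2
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y
```

As a usage example, distorting the point (0.3, -0.2) with the assumed coefficients k1 = -0.2, k2 = 0.05, p1 = 0.001, p2 = -0.0005 and then calling `undistort` on the result recovers the original point to within numerical precision.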

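5-1-2 Rectification

Rectification compensates for the angle and distance between the cameras so that corresponding points in the two captured images have the same row coordinate.

The result is a state in which the two image planes lie in the same plane and the image rows are exactly aligned.

Rectification turns the search for matching image regions from a two-dimensional search problem into a one-dimensional one, so it is essential for making stereo vision (stereo camera) processing efficient. Rectification of stereo images is commonly used as a preprocessing step for disparity calculation and for creating anaglyph images.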

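Fig. Image before rectification

Fig. Image after rectification

5-1-3 Matching

Matching estimates disparity by matching corresponding regions of the two images. Disparity here means the difference in position of corresponding regions between the two images.

Once stereo matching has estimated the disparity of each region of the image, the distance can be calculated using the principle of triangulation. Matching involves a stereo correspondence search, that is, a search for the same point in the two different camera images. Many matching algorithms exist for this search.

OpenCV, the computer vision programming library described below, implements a fast and effective block matching stereo algorithm. Conceptually, it sets a window on the rectified images and takes as the corresponding point the position where the sum of absolute differences (SAD) within the window is smallest.

This block matching stereo correspondence algorithm has three steps.

1. Prefiltering to normalize image brightness and enhance texture.

2. Searching for corresponding points along horizontal epipolar lines using a SAD window.

3. Postfiltering to remove bad correspondences.

In the prefiltering stage, the input images are normalized to even out brightness and enhance texture so that matching can be performed effectively.

The correspondence search is then performed by sliding the SAD window over the disparity search range, starting from the reference pixel. For each feature in the left camera image, the best match is searched for in the corresponding row of the right camera image.

After rectification, each row is an epipolar line, so the matching location in the right camera image can be assumed to lie in the same row (the same y coordinate) as in the left camera image. Because the cameras of a stereo camera are mounted in parallel, a disparity of zero corresponds to the same point (x0), and larger disparities lie to the left of it in the image (see the figure below).

In postfiltering, outliers and bad correspondences are removed, for example by comparing the left and right disparity values and checking whether they are consistent.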

Fig. Illustration of the image search

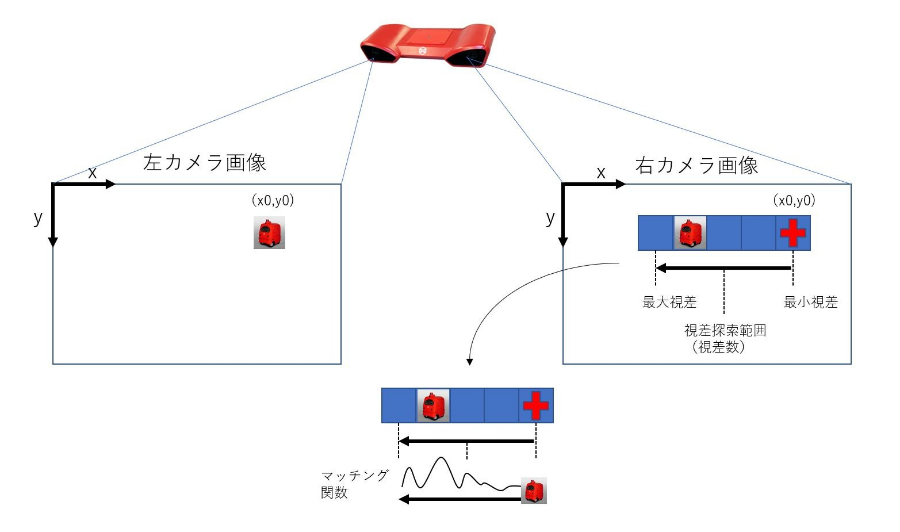
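The postfiltering step mentions checking whether the left and right disparity values are consistent. A minimal sketch of such a left-right consistency check on a single image row might look as follows. The function name, the tolerance, and the use of -1 as an "invalid" marker are assumptions for this example and are not taken from OpenCV or ZMP software.

```python
def left_right_check(disp_left, disp_right, tolerance=1, invalid=-1):
    """Keep a left-image disparity only if the right image agrees with it.

    disp_left[x]  : disparity estimated for pixel x of a left-image row
    disp_right[x] : disparity estimated for pixel x of the same right-image row
    """
    checked = []
    for x, d in enumerate(disp_left):
        x_right = x - d  # where the left pixel should appear in the right image
        if d < 0 or x_right < 0 or x_right >= len(disp_right):
            checked.append(invalid)
        elif abs(disp_right[x_right] - d) <= tolerance:
            checked.append(d)
        else:
            checked.append(invalid)  # the two views disagree: reject
    return checked


# Hypothetical one-row disparity maps.
disp_left = [0, 0, 2, 2, 2, 5]
disp_right = [2, 2, 2, 0, 0, 0]
print(left_right_check(disp_left, disp_right))  # prints [-1, -1, 2, 2, 2, -1]
```

In this made-up row, the three pixels with disparity 2 are confirmed by the right image, while the remaining pixels (for example an occluded region and an outlier of 5) are marked invalid.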

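5-1-3-1 What Is an Epipolar Line?

The epipolar line mentioned several times above comes from epipolar geometry, the geometry of stereo vision in which two cameras capture a three-dimensional scene. An epipolar line is a line connecting specific points defined in this geometry.

Epipolar geometry is useful for recovering three-dimensional depth information from images taken from two different positions and for finding correspondences between the images.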

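Fig. Epipolar geometry

The explanation of epipolar geometry assumes the following.

・A point P in three-dimensional space is projected (by perspective projection) onto the projection planes of the two cameras (Left view, Right view).

・Ol and Or are the centers of projection of the two cameras.

・Points pl and pr are the projections of P onto each projection plane.

■ Epipole

・Because the two cameras are at different positions, if each camera could see the other, the other camera's center of projection would be projected to el and er respectively. These points are called epipoles or epipolar points.

・el, er, Ol, and Or all lie on the same straight line in three-dimensional space.

■ Epipolar line

An epipolar line is a line on a projection plane that runs from a projected point to the corresponding epipole.

The line Ol-P is projected to a single point pl in the left camera, whereas in the right camera it appears as a line. With pr denoting the projection of P onto the right camera's projection plane, the line er-pr in the right camera is called the epipolar line. (In the left camera, the line el-pl is the epipolar line.)

An epipolar line is uniquely determined by the three-dimensional position of P, and every epipolar line passes through the epipole (el or er in the figure).

・Conversely, every line through the epipole is an epipolar line.

■ Epipolar plane

・The plane through the three points P, Ol, and Or is called the epipolar plane.

・The intersection of the epipolar plane with a projection plane coincides with the epipolar line. (The epipole lies on the epipolar line.)

■ Epipolar constraint

When the relative position of the two cameras is known, the following holds.

・Given the projection pl of P in the left camera, the epipolar line er-pr in the right camera is defined, and the projection pr of P in the right camera lies somewhere on this epipolar line. This is called the epipolar constraint.

・In other words, if both cameras capture the same point, it must lie on the corresponding epipolar lines.

・As a result, the question of where a point seen in one camera appears in the other can be answered by searching only along the epipolar line, which saves a considerable amount of computation.

・If the correspondence is correct and the positions of pl and pr are known, the position of P in three-dimensional space can be determined.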
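The epipolar constraint is usually written with a 3x3 matrix, commonly called the fundamental matrix F, that maps a point in one image to its epipolar line in the other. That matrix is not introduced in the text above, so treat the following as an illustrative sketch under stated assumptions: an ideal rectified pair in which the right camera is displaced purely horizontally, for which F takes the simple form shown in the code.

```python
# Fundamental matrix of an ideal rectified stereo pair (up to scale).
# Assumption: the cameras differ only by a horizontal translation.
F_RECTIFIED = [
    [0, 0, 0],
    [0, 0, -1],
    [0, 1, 0],
]


def epipolar_line(F, point_left):
    """Line (a, b, c) in the right image, meaning a*u + b*v + c = 0."""
    x, y = point_left
    p = (x, y, 1.0)  # homogeneous coordinates
    return tuple(sum(F[i][j] * p[j] for j in range(3)) for i in range(3))


def epipolar_residual(F, point_left, point_right):
    """Zero when point_right lies on the epipolar line of point_left."""
    a, b, c = epipolar_line(F, point_left)
    u, v = point_right
    return a * u + b * v + c
```

For the left-image point (120, 45), `epipolar_line` returns the line -v + 45 = 0, that is, the image row v = 45. This is the one-dimensional search that rectification makes possible: a candidate on row 45 gives a residual of zero, while a candidate on any other row does not.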

5-1-4 Triangulation

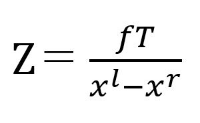

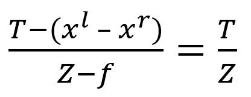

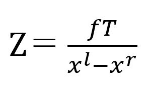

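When the geometric arrangement of the cameras is known, the disparity map is converted to distance by the principle of triangulation. The depth Z can be written as a formula, and the calculation is explained here using the figure.

As shown in the figure, assume a stereo camera for which distortion correction and rectification have been carried out accurately. The two cameras then have image planes that lie exactly in the same plane, exactly parallel optical axes (the optical axis is the ray from the center of projection O through the principal point c, also called the principal ray), and the same focal length f.

Assume that the principal points cx_left and cx_right have been calibrated so that they have the same pixel coordinates in the left and right images. Assume further that the image rows are aligned, so that every pixel row of one camera is exactly aligned with the corresponding row of the other. Let a real-world point P be visible in both the left and right image views, with horizontal coordinates xl and xr.

The disparity is then defined as d = xl - xr, and the depth Z can be derived by the principle of triangulation.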

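From the similar triangles in the figure, with B denoting the baseline length (the distance between the two centers of projection),

$$\frac{B - (x_l - x_r)}{Z - f} = \frac{B}{Z}$$

we obtain

$$Z = \frac{f B}{x_l - x_r} = \frac{f B}{d}$$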

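From the formula above, depth is inversely proportional to disparity. When the disparity is close to 0 (a distant object), a small change in disparity produces a large change in depth. When the disparity is large (a nearby object), a small change in disparity has little effect on depth. Stereo camera systems therefore achieve high depth resolution particularly for objects relatively close to the camera.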
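The inverse relationship between depth and disparity is easy to see numerically. The sketch below uses the 220 mm baseline quoted for the RoboVision2 series later on this page; the focal length of 1000 pixels is a hypothetical value chosen only to make the arithmetic simple, and the function names are assumptions for this example.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d, with f and d in pixels and B in meters."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step(disparity_px, focal_px, baseline_m):
    """Change in depth when the disparity decreases by one pixel."""
    nearer = depth_from_disparity(disparity_px, focal_px, baseline_m)
    farther = depth_from_disparity(disparity_px - 1, focal_px, baseline_m)
    return farther - nearer


FOCAL_PX = 1000.0   # hypothetical focal length in pixels
BASELINE_M = 0.22   # 220 mm baseline

for d in (100, 20, 5):
    z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
    step = depth_step(d, FOCAL_PX, BASELINE_M)
    print(f"d = {d:3d} px  Z = {z:6.2f} m  one-pixel step = {step:6.3f} m")
```

With these assumed values, a one-pixel disparity change at d = 100 px (Z = 2.2 m) moves the depth by about 2 cm, whereas at d = 5 px (Z = 44 m) it moves the depth by 11 m.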

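5-2. Processing Units

For PC-based image processing, NVIDIA GPUs (graphics processing units), well known for image processing, and CPUs (central processing units) such as the Intel Core i7 and i5 are used.

GPUs are designed for highly parallel graphics workloads, so they can often process images more efficiently than CPUs. When the application is narrowly defined, an FPGA (field-programmable gate array) may be used for image processing instead. Altera, which was acquired by Intel, is a well-known FPGA vendor.

An FPGA is an LSI whose circuit configuration can be designed by programming. Because the circuits are defined by programming, the internal circuit configuration of the chip can be changed and tailored to a specific application, and FPGAs are sometimes chosen for their advantages in cost and development time.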

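5-3. Image Processing Programming (OpenCV)

There are many options for image processing programming. OpenCV (official name: Open Source Computer Vision Library) is an open source computer vision library. The library is written in C and C++ and implements a wide variety of functions needed to process images and video on a computer. Because it is distributed under a permissive license (the BSD license, and the Apache 2.0 license from version 4.5.0 onward), it can be used for commercial as well as academic purposes.

It also supports multiple platforms, which is why it is used in such a wide range of settings.

OpenCV can be used in many environments. It is a library for C/C++, Java, Python, and MATLAB with functions for image processing, image analysis, machine learning, and more, and it supports platforms including macOS, FreeBSD and other POSIX-compliant Unix-like operating systems, Linux, Windows, Android, and iOS.

Applying a general-purpose image processing programming environment makes it easier to build stereo vision systems and to develop embedded systems that run stereo vision algorithms, which helps shorten development time and hold down product cost.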

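6. Distance Accuracy of Stereo Cameras

The accuracy of distance measurement with a stereo camera is affected by several factors.

6-1. Camera Mounting Position

To capture images accurately, the relationship between the baseline length (the distance between the two cameras) and the mounting position is important. Camera positions can be fixed mechanically with a jig to guarantee the mounting position, or residual errors can be compensated in software by calibration after mounting. The mounting position is affected by temperature, vibration, and similar factors, so it can drift over time, reducing the density of the disparity information and the accuracy of the distance information obtained from the captured images. One countermeasure is automatic calibration to maintain accuracy.

6-2. Lens Distortion

A camera lens appears to have a perfectly smooth curved surface, but each lens is manufactured with its own variations. These cause image distortion, and matching can fail so that disparity cannot be calculated. It is common practice to correct the distortion in software so that stereo matching can accurately measure the distance from the camera to the object.

6-3. Lens Resolving Power

Lens resolving power describes how much information can be rendered per unit area. Take a subject with many parallel horizontal lines on a white background, like a striped pattern. If the lines are rendered clearly enough to be distinguished as separate, the lens resolves them. If the lines blur together so that they can no longer be told apart, the line density exceeds the resolving power of the lens. Such blurring lowers the accuracy of matching between the left and right images, which can reduce the accuracy of distance measurement (increase the measurement error).

6-4. Sensor Resolving Power

The resolving capability of the image sensor is a similar concept to that of the lens: what matters is how finely the image formed on each unit area of the sensor can be converted into data. Because the sensor is a collection of pixels, pixel density per unit area is the measure of its resolving performance. Subject to the image processing load and the accuracy required, a sensor with higher pixel density and a larger pixel count is preferable.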

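7. Image Processing for Stereo Cameras

The software of the automotive stereo camera RoboVision2s features a WDR (Wide Dynamic Range) function, which combines images of different brightness so that the scene is captured clearly even under strong contrast between light and dark. In addition, ZMP is working on two stereo image processing algorithms: object detection and Virtual Tilt Stereo.

7-1. WDR (Wide Dynamic Range) Function

WDR (wide dynamic range) is a function that processes a dark image and a bright image, brightening the dark areas and darkening the bright areas, to produce an image of suitable brightness.

For example, when shooting a bright outdoor scene such as a tunnel exit from inside a dark tunnel, the frame contains both bright and dark areas. If the exposure is set for the dark areas, the bright areas are blown out to white and cannot be seen.

A camera with wide dynamic range records the bright and dark areas separately and then combines them, so both the bright and the dark parts of the scene are recorded clearly.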

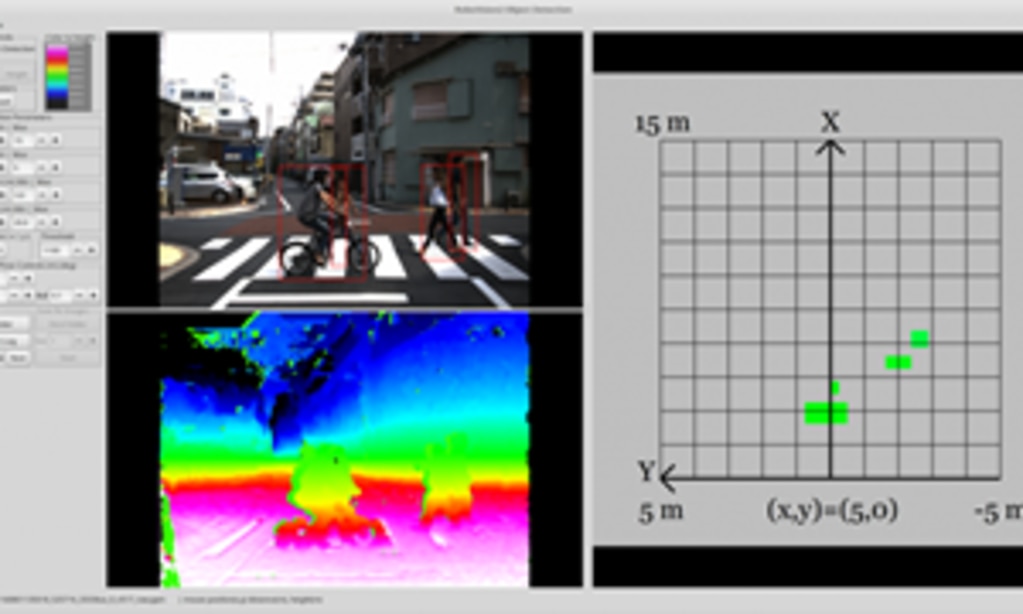

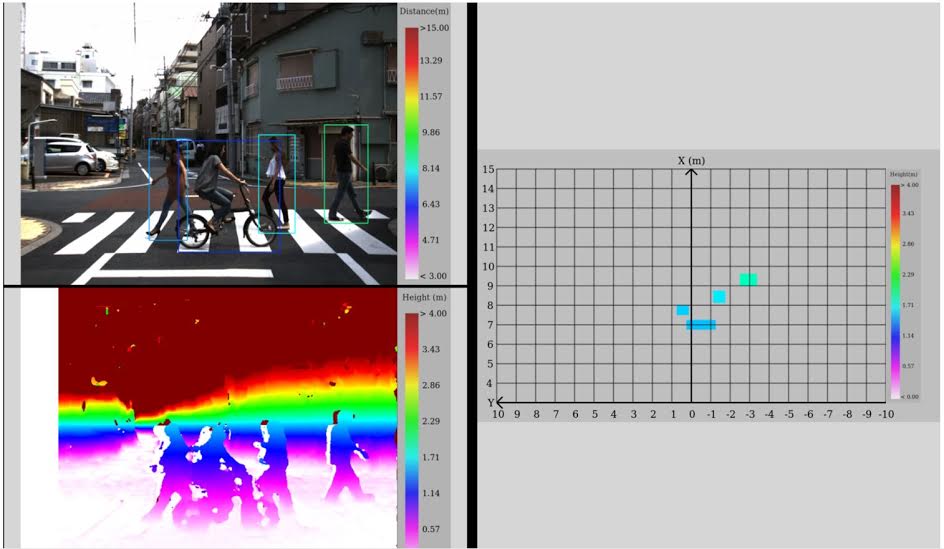

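7-2. Object Detection Algorithm

The object detection algorithm takes the camera's mounting attitude as input and computes the ground surface (road height) in the measured image. Where points (matched points) that rise above the road height are clustered in a region, it judges that an object is present and outputs the object's width, height, and position relative to the camera. Because detection takes depth information into account, a pedestrian and a bicycle that overlap in the image, for example, can be detected as separate objects. The algorithm has since been improved, and software that performs detection at 30 fps is also available.

Object detection software screen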

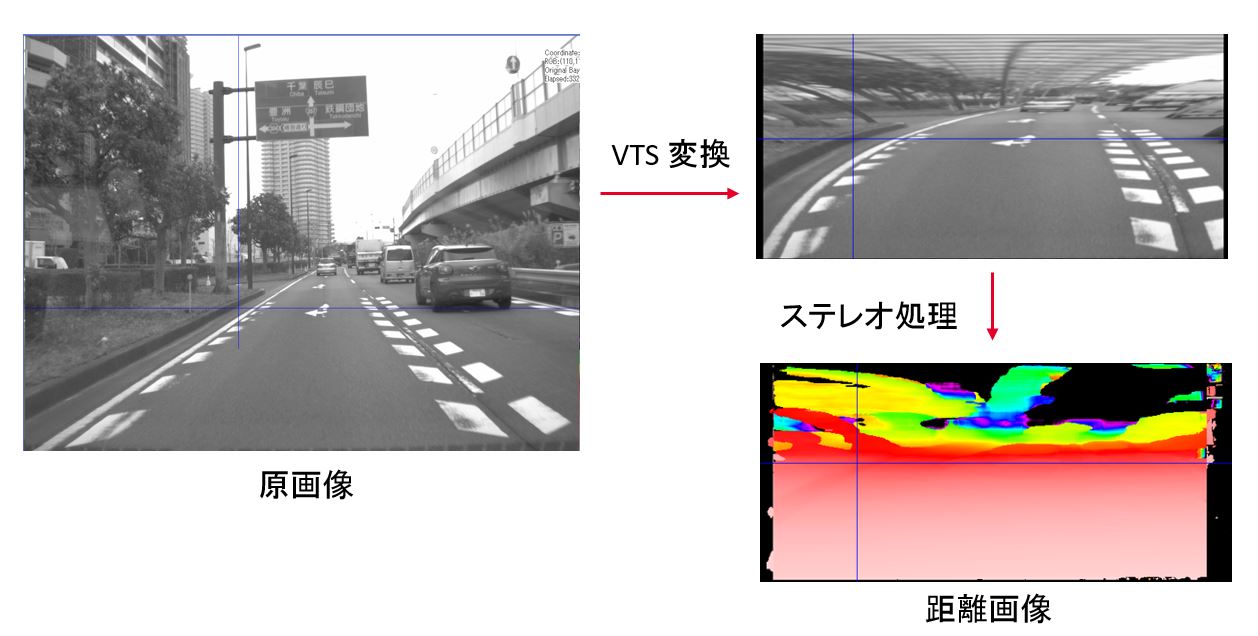

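7-3. Virtual Tilt Stereo Algorithm

Virtual Tilt Stereo is an algorithm developed for detecting vehicles and obstacles on the road with a stereo camera in autonomous driving and ADAS development.

Such detection requires detecting the road surface, and Virtual Tilt Stereo improves on conventional stereo camera methods in this respect. Accurate road surface detection is difficult because an automotive camera normally faces forward, so its optical axis is nearly parallel to the road surface and high-accuracy disparity cannot be obtained.

The Virtual Tilt Stereo algorithm uses panoramic image stitching techniques: the image is rotated as if the camera had been rotated about its principal point (nodal point), so that the optical axis points downward. Measuring the road surface from above in this way improves the accuracy of road surface measurement.

This improves the detection of unevenness on the road surface and makes it possible to detect objects that rise above it, such as curbs at the road edge and vehicles ahead.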
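The geometric fact that panorama stitching relies on, and that the description above appears to build on, is that rotating a camera about its center of projection changes the image by a homography H = K R K^-1, where K holds the camera intrinsics and R is the rotation. The sketch below illustrates only that fact for a downward tilt. It is not ZMP's Virtual Tilt Stereo implementation, and the intrinsics (f = 800 px, principal point at (320, 240)), the axis convention (x right, y down, z forward), and all function names are assumptions for this example.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def tilt_homography(f, cx, cy, tilt_rad):
    """Homography mapping original pixels to a view tilted downward."""
    c, s = math.cos(tilt_rad), math.sin(tilt_rad)
    K = [[f, 0, cx], [0, f, cy], [0, 0, 1]]
    K_inv = [[1 / f, 0, -cx / f], [0, 1 / f, -cy / f], [0, 0, 1]]
    # Rotation about the x axis taking original camera coordinates
    # to the coordinates of the virtually tilted camera.
    R = [[1, 0, 0], [0, c, -s], [0, s, c]]
    return matmul(matmul(K, R), K_inv)


def warp_point(H, u, v):
    """Apply a homography to pixel (u, v) and dehomogenize."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w
```

As a check on the convention, with a 10 degree tilt the original pixel that lies 800 * tan(10°) rows below the principal point maps to the principal point (320, 240) of the virtual view, which is what "pointing the optical axis downward" means here.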

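8. ZMP Stereo Camera Products

ZMP sells the stereo camera RoboVision2s, which can be used as an on-board camera for development with dedicated mounting jigs (vehicle mounts and adapters), as well as optional products that use the algorithms described above. This section also introduces RoboVision3, the latest model, a quad camera that incorporates two stereo cameras.

8.1 RoboVision2s

The ZMP RoboVision2 series is a general-purpose stereo camera for development with a 220 mm baseline, Sony CMOS image sensors, and a USB 3.0 interface, designed for easy image measurement. ZMP designs and manufactures everything from the image acquisition board to the software. PC software and a stereo image viewer are included, so evaluation can begin immediately. An SDK is also provided for development, so users can build their own applications and software.

Connect the camera by USB to a PC with the standard application installed and launch the software. You can then specify the measurement frame rate and check the image (angle of view) as with an electronic viewfinder while measuring. Compared with the earlier RoboVision2, RoboVision2s adds an optical low-pass filter to reduce moiré and false color.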

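8.2 RoboVision2s Object Detection Package

8.3 Stereo Camera Unit for Autonomous Driving: RoboVision3

The stereo camera unit RoboVision3 is a quad camera equipped with the Sony IMX390 image sensor and capable of high-quality image measurement. It can also be supplied with a body (housing) attached on request. See the product page for specifications.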

© ZMP INC. All Rights Reserved.